Dakota Fusion Race Car Watch photo

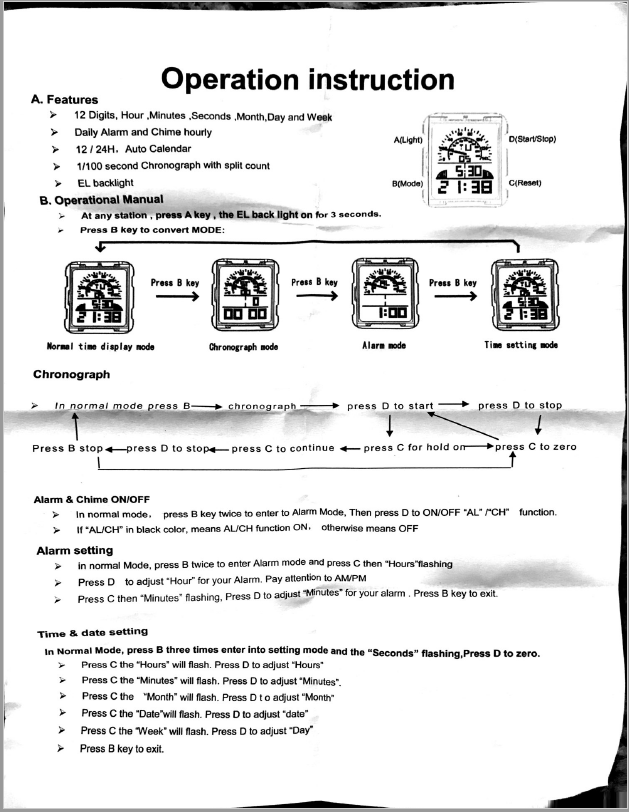

Watch instructions

The band looks like a tire tread

On a few of my Windows 10 computers, Windows Defender was failing to update properly through Windows Update. I found that you can manually update Windows Defender using this command from a terminal window:

```bat
"%programfiles%\windows defender\mpcmdrun.exe" -signatureupdate -http
```

I found this instruction in the comments of this post: https://answers.microsoft.com/en-us/protect/forum/protect_defender-protect_updating/definition-update-for-windows-defender-kb2267602/d0d06c7a-fd70-4f27-94a6-9320d8114768

```bat
net accounts /maxpwage:unlimited
```

Thanks @hansb1!

You can see his answer given in a comment to this Bleeping Computer post: https://www.bleepingcomputer.com/forums/t/565081/cannot-set-password-never-expires-server-2012/

It’s actually pretty easy to throw a SQL query against SQL Server using PowerShell. This would be very familiar to any C# or FoxPro developer, so don’t let the mystery of PowerShell scare you away from exploring this handy tool.

Here’s a tiny code snippet to show you how simple it is:

Note that DoSql() is a wrapper function I wrote that creates a few PowerShell objects to do all the low-level work, so you don’t have to repeat all that connection and plumbing code in every script. Once you have that in place, executing SQL queries is a piece of cake. After you get back the query results, PowerShell has all the standard language features you’d expect, such as looping and counting, plus some cool sorting and filtering tricks that are pretty handy for working with the data rows.

Helper functions

```powershell
function DoSql($sql) {
    # Based on https://cmatskas.com/execute-sql-query-with-powershell/
    $SqlConnection = GetSqlConnection
    if ($null -eq $SqlConnection) {
        # GetSqlConnection returns $null when the connection cannot be opened
        throw "Could not open a connection to SQL Server."
    }

    $SqlCmd = New-Object System.Data.SqlClient.SqlCommand
    $SqlCmd.CommandText = $sql
    $SqlCmd.Connection = $SqlConnection

    # Run the query and load the results into an in-memory DataTable
    $Reader = $SqlCmd.ExecuteReader()
    $DataTable = New-Object System.Data.DataTable
    $DataTable.Load($Reader)

    $SqlConnection.Close()
    return $DataTable
}
```

```powershell
function GetSqlConnection {
    $sqlServerInstance = "192.168.0.99\SQLExpress"
    $database = "MyDB"
    $userName = "UserName"
    $password = "Password"

    $ConnectionString = "Server=$sqlServerInstance; database=$database; Integrated Security=False;" +
        "User ID=$userName; Password=$password;"

    try {
        $sqlConnection = New-Object System.Data.SqlClient.SqlConnection $ConnectionString
        $sqlConnection.Open()
        return $sqlConnection
    }
    catch {
        return $null
    }
}
```

From here, it becomes really easy to add system admin tasks such as shooting off an email, calling a Stored Proc, writing a Windows Event Log entry, calling an external API, or blah-blah-blah.

Every modern version of Windows has PowerShell pre-installed, and it even has a simple IDE (called PowerShell ISE) that gives you a coding environment with IntelliSense, code completion, and debugging tools.

Note: I found this article very helpful in learning how to do this: https://cmatskas.com/execute-sql-query-with-powershell/

Download from git repo here: https://bitbucket.org/mattslay/foxpro_message_queue

Job Queues, Message Queues, Message Broker, Asynchronous Processing… There are many names for this very helpful architecture where you take a process that holds up a user’s workstation for several seconds, or even minutes, and move the work to another machine (i.e. server or other workstation) to be processed offline, “in the background”, aka “asynchronously”, either immediately or at some later time.

Using some helpful classes from the West Wind Web Connection framework (also available in the West Wind Client Tools package) we can implement a robust Message Queue solution in Visual FoxPro. All it takes is two simple steps:

Step 1 – Submit a “Message”: Create and submit a simple Message record to the QueueMessageItems database table, which has the name of the Process or Action that you want to execute, along with any required parameters, values, settings, user selections, or other criteria required to complete the task. Boom… in a blink the local user workflow is finished and they think your system is the fastest thing in the world, because their computer is free again in a few milliseconds and they can resume other tasks.

Step 2 – Process the “Message”: Down the hall, you are running a Message Queue Processor on a server or other workstation that can access the database, network drives, printers, and the internet, make API calls, and so on, as needed to complete the task. Once the Message (i.e. task) is picked up by the Processor it is marked as Started, and when all the processing is finished the record is marked as Complete. Along the way you can send other messages, emails, SMS text messages, reject messages, etc.

A simple UI form allows you to observe all the Message processing as it happens.

Another benefit is that on the “server” (i.e. whatever machine is running the Message Processor app), you can launch MULTIPLE instances of the Message Processor, which essentially achieves the performance of a multi-threaded app because several Messages are processed simultaneously. FoxPro’s single-threaded architecture can be circumvented with this approach; there is no need to wait for each Message to be processed sequentially. When running on Sql Server, GetNextMessage() calls a Stored Proc that also flags the record as in-process so that other running instances will not pick up the same Message.
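The West Wind classes handle the “claim a Message” step with the QueueMessageItems table and, on Sql Server, a Stored Proc. To show the same idea outside FoxPro, here is a minimal shell sketch that swaps in a different technique: a directory-based queue where each message is a file, and a worker claims one by renaming it into a `processing` folder. A rename within one filesystem is atomic, so two Processor instances can never grab the same Message. The folder layout and the function names `claim_next_message`, `complete_message`, and `run_worker` are hypothetical and are not part of wwAsync.

```bash
#!/usr/bin/env bash
# Hypothetical directory-based queue, NOT the West Wind QueueMessageItems table.
# Layout: QUEUE/pending (submitted), QUEUE/processing (started), QUEUE/complete.

# claim_next_message QUEUE_DIR WORKER_ID
# Atomically moves one pending message into processing/ and prints its new path.
# Returns 1 when there is nothing left to claim.
claim_next_message() {
    local queue_dir=$1 worker_id=$2 msg name
    mkdir -p "$queue_dir/processing" "$queue_dir/complete"
    for msg in "$queue_dir"/pending/*; do
        [ -e "$msg" ] || continue                 # pending/ is empty (glob did not match)
        name=$(basename "$msg")
        # rename is atomic: if another worker moved this file first,
        # our mv fails and we simply try the next message.
        if mv "$msg" "$queue_dir/processing/$name.$worker_id" 2>/dev/null; then
            printf '%s\n' "$queue_dir/processing/$name.$worker_id"
            return 0
        fi
    done
    return 1
}

# complete_message MESSAGE_PATH QUEUE_DIR: mark a claimed message as Complete.
complete_message() {
    mv "$1" "$2/complete/"
}

# run_worker QUEUE_DIR WORKER_ID: process messages until the queue is empty.
run_worker() {
    local queue_dir=$1 worker_id=$2 msg
    while msg=$(claim_next_message "$queue_dir" "$worker_id"); do
        echo "$worker_id processing $(basename "$msg")"   # real work goes here
        complete_message "$msg" "$queue_dir"
    done
}
```

To mimic several Processor instances, start `run_worker ./queue worker1 &` and `run_worker ./queue worker2 &`; each file in `./queue/pending` is picked up by only one of them.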

I recently used Message Queue to solve this nagging problem… When printing a particular report from a form in our FoxPro app, the form (and user) is held up for about 3-4 seconds while the report renders and spools. That doesn’t sound like much, but I *hate* waiting that long every time we print a new Shop Order to send out to production. It happens dozens of times per day for several users.

So… Using the West Wind Message Queue, the form now submits a Print Request Message to the Message Queue, which takes about 30-50 milliseconds, and the user form is never held up waiting for the report to print. The Queue Message Manager (a VFP app running on the server) picks up the Message and prints the report.

Conveniently, it prints to the default printer defined on the server, which is the main printer central to all users, which is the same one that is the default printer on everyone’s desktop. If you needed to send the report to a specific printer, you’d just have to add that printer name using loAsync.SetProperty(), then do Set Printer in the Queue Manager code to control where the report comes out.

Consider this asynchronous architecture if you have any such hold-ups or long-running processes that your users wastefully wait on each day.

You can read Rick Strahl’s extensive whitepaper on his wwAsync class. (Note: the whitepaper is kind of old, and its focus is on how his Web Connection framework uses the wwAsync class to handle back-end processing in web apps, but using this on a LAN or in a desktop/client application works exactly the same way.) Documentation of each method and property can be found here.

There’s also a more robust .Net version of this same framework. It has even more features for managing multiple threads and multiple queues, and a much better live GUI that runs in a web browser. It’s an open source project on GitHub: https://github.com/RickStrahl/Westwind.QueueMessageManager

The required parameters, values, user choices, etc, are stored on the Message record in an XML field using the SetProperty() method when creating the Message, and can be retrieved using the GetProperty() method when processing a record.

XML example of data stored on a Queue Message:

Here’s what the messages look like in the QueueMessageItems table:

Here is the data structure of the fields used on the QueueMessageItems table. Some of the fields are managed by the Message Manager class, while several are available for your use in constructing and processing the message.

I have a VFP9 app that runs against Sql Server. When I run the forms inside the FoxPro IDE, running against the production Sql Server database located on a central server down the hall (i.e. NOT on my local developer machine), all the forms load up and run very quickly.

However, once compiled into an exe and running from the same developer machine, the forms take about *FIVE* times as long to load up. This is very consistent across all forms. The difference is 1.5 seconds load time in the IDE versus 7 seconds load time when running from an EXE.

Is there a particular reason that forms would run more quickly inside the FoxPro IDE than when compiled into an EXE?

Some background: A basic form in my Visual FoxPro 9 app will create between 30-40 Business Objects when it loads up (yes, those really cool kind of Sql Server Business Objects based on the West Wind wwBusiness framework). In our system, like most others, an Order has a Customer, a Contact, a ShipToAddress, and a vast collection of child items (i.e. cursors), such as LineItems, LaborRecords, MaterialRecords, ShippingTickets, Invoices, etc. So you can see it’s a very robust business/entity model and *every one* of those items I listed has its own formal Business Object to load the respective data and do various pieces of work on the data.

Each business object is a separate PRG: Job.prg, Customer.prg, Contact.prg, JobLineItems.prg, JobShippingTickets.prg, etc… You get the picture. In all, I have about 40-50 of these Business Objects, each in a separate prg file.

Each UI form uses a loader function to instantiate each needed Business Object and all other related Business Objects. The loader functions make a simple call like:

```
loJob = NewObject("Job", "Job.prg")
Return loJob
```

Well, guess what I learned… If you call NewObject() in this manner from within the FoxPro IDE, it runs in about .03 seconds. That’s plenty fast enough for me and my users, but my users don’t run the app from my IDE. They run a compiled EXE, and in that case the same NewObject() call takes .21 seconds! Please note that is *7* times longer for each call! Now remember that my forms will typically call this function about 30-40 times, so the result is that the forms load with a startup time of around 1 second in the VFP IDE, but take 7-8 seconds when running from an EXE. Are you kidding me? What is going on? (BTW, I discovered this using the Coverage Profiler and a good bit of snooping around in the log results.)

So, the offender that I independently discovered here is that NewObject() is really slow when running inside an EXE, but very fast when running in the IDE. I can’t explain that, but it’s true.

The remedy was to get away from NewObject() (in my BO factory) and use CreateObject() instead, which requires the prg(s) to be loaded into memory by way of SET PROCEDURE. So, instead of making 50 Set Procedure calls, I wrote a build task that merges all the separate prgs into a single prg, added that file to the project as an included file, and then added a Set Procedure command for it in the app bootup sequence.

```
Set Procedure To BusinessObjects Additive
```
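The post doesn’t show the merge build task itself. As one possible version, here is a minimal sketch assuming a Unix-style shell (for example Git Bash on Windows) and business-object PRGs that hold only class definitions, with no top-level program code. The function name `merge_prgs` and the banner comment it writes before each file are hypothetical.

```bash
#!/usr/bin/env bash
# merge_prgs SOURCE_DIR OUTPUT_FILE
# Concatenate every *.prg in SOURCE_DIR (lowercase extension only) into
# OUTPUT_FILE, so the app can load all classes with a single SET PROCEDURE.
merge_prgs() {
    local src_dir=$1 out_file=$2 prg tmp_file
    tmp_file=$(mktemp) || return 1
    for prg in "$src_dir"/*.prg; do
        [ -e "$prg" ] || continue        # no .prg files found
        # Skip the output file itself if it lives in the same folder.
        [ "$(basename "$prg")" = "$(basename "$out_file")" ] && continue
        # VFP treats a line starting with * as a comment.
        printf '*-- Begin %s\r\n' "$(basename "$prg")" >> "$tmp_file"
        cat "$prg" >> "$tmp_file"
        printf '\r\n' >> "$tmp_file"     # keep files separated even without a final newline
    done
    mv "$tmp_file" "$out_file"           # replace the old merged file in one step
}
```

For example, `merge_prgs ./bo ./BusinessObjects.prg` (where `./bo` is a hypothetical folder of BO prgs) could run as a pre-build step before rebuilding the project.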

I then changed my BO loader function to use CreateObject() instead of NewObject():

```
loJob = CreateObject("Job")
Return loJob
```

And, boom, I now get lightning-fast creation of each BO even when running in the compiled EXE, and the form loads in 1 second or so. Users = Happy.

Now, apparently I am not the only one who has discovered this oddity (maybe you already knew it yourself), but as I was homing in on what I thought the issue was, and what I thought the remedy would require, I did a little Googling and found a 10-year-old blog post by some dude named Rick Strahl who had dealt with this issue himself and confirmed the same exact results and remedy that I landed on. Here is his post: http://www.west-wind.com/wconnect/weblog/ShowEntry.blog?id=553. You can also see more discussion on this topic at Fox.Wikis.com: http://fox.wikis.com/wc.dll?Wiki~NewObject

Wow! This was a fun day. I love killing these little pests.

User Walter Meester posted a reply on UT asking if I had tried using Sys(2450,1) which is a flag which “Specifies how an application searches for data and resources such as functions, procedures, executable files, and so on.” (From the VFP Help.)

```
Parameters:
---------
0 = Search along path and default locations before searching in the application. (Default)
1 = Search within the application for the specified procedure or UDF before searching the path and default locations.
```

So, I went back to the NewObject() approach, added the suggested SYS(2450,1) call, and did careful, repetitive testing.

Result: Using Sys(2450,1) along with NewObject() does help a little bit when running inside an exe, *BUT*, it is still slower than using SET PROCEDURE along with CreateObject().

There are very distinctive and noticeable speed differences:

I hope this information helps your app performance in some way.

Here are the results I found from the Coverage Profiler for 1st Place and 3rd Place above. (I did not go back and run 2nd Place through the Coverage Profiler, but you can tell where it would fall based on the time results listed above.)

I’ve done it again… I needed to set up an Ubuntu machine to run a simple CRUD web app I maintain for a small ministry group. The app is built on Rails 3.2.8, Bootstrap 2 UI, Ruby 1.9.3, and uses SQLite for a local single-file database. I began writing the app in April 2012, with small tweaks here and there each year since. It was my first real Rails app, and it still runs fine today as a contact management system for the 5-person distributed team who use it to track their ministry contacts and record the various interactions they each have with their members.

I needed to move the app off of my own network, which has plenty of internet and power outages throughout the year, so I decided to go with a cloud-hosted option. I chose Digital Ocean and one of their small “droplet” virtual machines running a ready-to-use Ubuntu 14.04 system. I am able to get by with 1 GB RAM and 20 GB storage, so the cost is $10 per month. Once the droplet is created using their very simple web portal, you get nothing more than a Linux box that you can access via SSH, VNC, or a terminal client like Putty (I installed Putty on my Windows machine using Chocolatey). It’s all terminal windows from there; no GUI at all.

So, to install Ruby and Rails, I logged into my droplet via a Putty terminal session and I followed one of the Digital Ocean help docs on how to install Ruby. It has you install RVM, and from that you can install the Ruby version that your app needs (in my case ruby-1.9.3-p392).

Here’s the RVM setup link from Digital Ocean: https://www.digitalocean.com/community/tutorials/how-to-install-ruby-on-rails-on-ubuntu-14-04-using-rvm

Then I installed git so I could clone the source code repo of my app from my BitBucket account:

```bash
apt-get install git
```

Then I had to install the Bundler gem so I could install all the other gems my app needs to run:

```bash
gem install bundler
```

To install the other gems in my app’s bundle list:

```bash
bundle install
```

One snag… While all the gems were installing during “bundle install”, I got an error from the “nokogiri” gem complaining that “libxml2 is missing”. I found a post on StackOverflow that showed me how to fix this problem:

http://stackoverflow.com/questions/6277456/nokogiri-installation-fails-libxml2-is-missing

```bash
sudo apt-get install libxslt-dev libxml2-dev
```

Next, I ran “bundle install” again, and this time all gems installed fine.

So, now I tried to run my app using the built-in Rails server:

```bash
rails s -p 3002
```

and I got an error that something in the app “Could not find a JavaScript runtime”, so it was back to StackOverflow via Google, where I learned how to fix this issue:

http://stackoverflow.com/questions/16846088/rails-server-does-not-start-could-not-find-a-javascript-runtime

```bash
apt-get -y install nodejs
```

So, finally, after all this hacking around in the terminal window, the app ran fine using the built-in Rails “WEBrick” server, which is all I need to host my app for about 5 users.
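If you ever need to rebuild the droplet, the install steps above could be collected into one function. This is a sketch under a few assumptions: RVM and the app’s Ruby version are already installed per the Digital Ocean guide, `sudo` is used so it also works from a non-root account, and the `apt-get update` step is an addition not in the walkthrough above. The `run` and `setup_rails_host` names and their arguments are hypothetical; setting `DRY_RUN=1` only prints the commands.

```bash
#!/usr/bin/env bash
# run CMD [ARGS...]: execute the command, or just print it when DRY_RUN=1.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

# setup_rails_host REPO_URL APP_DIR
# The steps from this post, in order, stopping at the first failure.
# Note: it cd's into APP_DIR, which changes the caller's working directory.
setup_rails_host() {
    local repo_url=$1 app_dir=$2
    run sudo apt-get update &&
    run sudo apt-get install -y git &&                      # to clone the app repo
    run sudo apt-get install -y libxslt-dev libxml2-dev &&  # nokogiri "libxml2 is missing"
    run sudo apt-get install -y nodejs &&                   # "Could not find a JavaScript runtime"
    run git clone "$repo_url" "$app_dir" &&
    run cd "$app_dir" &&
    run gem install bundler &&
    run bundle install
}
```

For example, `DRY_RUN=1 setup_rails_host <your-repo-url> ~/myapp` prints the eight commands without running anything.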

I had used the Putty terminal app to remote into the VM droplet and start the app using the rails command listed above, but when I closed the terminal session, the app process was killed and no one could access it from their web browsers. So, I found a StackExchange post that explained how to run a process in the background and have it ignore the terminal session shutting down, so it stays alive even after the session ends. Here’s the link: http://unix.stackexchange.com/questions/479/keep-ssh-sessions-running-after-disconnection

The “nohup” command starts a process and tells it to “ignore the hang-up signal when the terminal session is ended”, and we can also add “&” at the end of the command, which tells the shell to “run the process in the background”. With this we can exit the terminal session and the process will continue running to serve web requests.

Command:

```bash
nohup rails s -p 3002 &
```
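One way to avoid hunting for the process id later is to save it at startup. Here is a minimal sketch; the `start_detached` name and the pid/log file locations are hypothetical. It relies on the shell’s `$!` variable, which holds the process id of the most recent background job, and it redirects output so nohup does not create a `nohup.out` file in the current folder.

```bash
#!/usr/bin/env bash
# start_detached PID_FILE LOG_FILE COMMAND [ARGS...]
# Start COMMAND with nohup in the background and record its process id.
start_detached() {
    local pid_file=$1 log_file=$2
    shift 2
    # nohup: ignore SIGHUP when the terminal closes; &: run in the background.
    nohup "$@" > "$log_file" 2>&1 &
    echo $! > "$pid_file"       # $! = process id of the last background job
    echo "started $* (pid $!)"
}
```

For this app that could be `start_detached ~/app.pid ~/app.log rails s -p 3002`, run from the app folder; a later session can read the id back with `cat ~/app.pid`.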

As long as you are in the same terminal session that you started the process in, you can use the “jobs -l” command to see the background process you just started, along with its process id. (Important: You’ll need the process id whenever you need to stop the Rails/WEBrick web server. Keep reading below…)

```bash
jobs -l
```

Here’s the output:

```text
[1]+ 3583 Running nohup rails s -p 3002 &
```

Now you can quit the terminal session, but the app will still keep running, so users can access the web app from their browser.

If you close this terminal session and log in later with a new one, it’s a little harder to find the process id of the Ruby/WEBrick session that you started in the original terminal session. However, we can find the process id using this command (notice we are piping the output from “ps” through grep and filtering on “ruby” so that we see only the ruby processes; you could also filter on “rails” just as well):

```bash
ps aux | grep ruby
```
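Note that `ps aux | grep ruby` also lists the grep command itself. Two common alternatives are sketched below: wrapping one letter in brackets (`grep '[r]uby'`) so grep’s own command line no longer matches, or `pgrep -f`, which matches against the full command line and prints only process ids. The function names are hypothetical, and the exact command line of the Rails process may differ on your system, so check the pattern against the `ps` output first.

```bash
#!/usr/bin/env bash
# find_pids PATTERN: print the id of every process whose full command line
# matches PATTERN (a regular expression), e.g. find_pids 'rails s -p 3002'.
find_pids() {
    pgrep -f "$1"
}

# list_ruby_processes: same idea as "ps aux | grep ruby", but the pattern
# [r]uby does not match grep's own command line ("grep [r]uby").
list_ruby_processes() {
    ps aux | grep '[r]uby'
}
```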

Now, when you need to end (kill) the process, you have to know its process_id. Note that nohup only makes the process ignore the hang-up signal (SIGHUP) sent when the terminal closes; it does not block other signals. A plain kill [process_id] sends SIGTERM and will normally stop the server. If the process does not respond, kill -9 [process_id] sends SIGKILL, which a process cannot ignore. (See the notes above on how to find the correct process_id.)

See this link for more info on this matter: http://stackoverflow.com/questions/8007380/how-to-kill-a-nohup-process

So:

```bash
kill -9 3583
```
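Because nohup only tells the process to ignore the hang-up signal (SIGHUP), a plain `kill` (which sends SIGTERM) is normally enough to stop it, with `kill -9` (SIGKILL) kept as a fallback. A minimal sketch of that escalation follows; `stop_server` and its 10-second default timeout are hypothetical choices.

```bash
#!/usr/bin/env bash
# stop_server PID [TIMEOUT_SECONDS]
# Ask the process to exit with SIGTERM, wait up to TIMEOUT seconds,
# then force it with SIGKILL, which cannot be caught or ignored.
stop_server() {
    local pid=$1 timeout=${2:-10} i
    kill "$pid" 2>/dev/null || return 0          # no such process: nothing to do
    for ((i = 0; i < timeout; i++)); do
        kill -0 "$pid" 2>/dev/null || return 0   # process has exited
        sleep 1
    done
    echo "pid $pid ignored SIGTERM; sending SIGKILL" >&2
    kill -9 "$pid" 2>/dev/null
}
```

With the process id from the output above, that would be `stop_server 3583`.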

A few days later I wanted to upload some files to my Droplet on Digital Ocean via FTP. I found this really simple help post on the Digital Ocean community forum that explains how to use FileZilla and the secure SFTP protocol to connect to your Droplet from a FileZilla client running on your local machine. No configuration is required on the droplet; SFTP was built-in and ready to use on the Ubuntu 14.04 image that I based my VM on. Here’s the link: https://www.digitalocean.com/community/questions/how-i-get-ftp-login-with-filezilla

Yee-haw!!!

Now, let me get back to my .Net/C#/Sql server/Entity Framework/MVC etc…

All rolled and welded tubes are formed with very hard roll form tooling. The “tools” are actually forming rolls used to fold, bend, and shape the flat strips of material through various transitions until it reaches the final shape. The final shape can be square or rectangular tubing, round pipe, or a variety of other shapes used in construction and industrial applications.

After thousands of linear feet of these roll-formed shapes are produced, the forming rolls begin to wear in certain areas, even though they are made of hardened tool steel. Since the material is very hard, not just any machine shop can fix or repair the tools. It usually requires the services of a professional roll reconditioning company that can do the hard-turning or regrinding using very specialized cutting tools and precision CNC lathes. It also requires highly skilled machinists who know what they are doing.

One service company with many years of experience in making and reconditioning roll form tooling is Mill Dynamics in Birmingham, Alabama. They can also resharpen slitter knives and the rubber backup rolls used to slit the large steel coils to the correct width for the pipe and tube mills.

Many pipe and tube companies also use Mill Dynamics to make replacement roll shafts and bearing housings needed to run the pipe mill. They can duplicate existing shafts or produce new roll shafts from your detailed production drawings. If the bearing housings on your pipe or tube mill are beginning to show signs of wear, they can work with you to make new rolling mill shafts and bearing housings.